I led the design and development of this searchable database for the Howard Center for Investigative Journalism. It was published in fall 2024.

The app is built with Flask and displays thousands of privately sponsored trips that members of the U.S. House of Representatives and their staffers have taken since 2012, many of them paid for by nonprofits with ties to lobbyists and special interests.

- Deportations were supposed to target bad guys. Most ICE arrests in Michigan capture non-criminals

- More Michigan kids are not fully vaccinated. See vaccination rates by county, school district

- Michigan Medicaid program faces $15B hit as 'big beautiful bill' affects rural counties

- Metro Detroit's most-blocked railroad crossings frustrate commuters, officials

- Detroit sees more violent crime than D.C. Could Trump send troops here?

- Where Sheffield won in Detroit mayoral primary, where Kinloch might gain ground: analysis

- UMD spent more than $136,000 on event security for Oct. 7, 2024

- English, Spanish among 8 UMD majors with significant enrollment declines since 2012

I used D3.js, Adobe Illustrator and Flourish to create a series of graphics analyzing the United States' use of PFAS, or "forever chemicals." I built a quick Python scraper to pull location data for PFAS detections from an ArcGIS Online map, and I analyzed data from a variety of sources in R to produce the final piece.
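Scrapers like this one typically skip the map's web page and query the feature layer behind it through the ArcGIS REST API. The sketch below is a minimal illustration of that approach, not the scraper I actually used: `LAYER_URL` is a hypothetical placeholder, and it assumes the layer supports paginated queries (`resultOffset`/`resultRecordCount`). It requests every field plus longitude/latitude geometry and flattens the Esri JSON response into rows ready for a CSV.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholder: the real layer URL behind the map is not given here.
# Feature layer URLs usually end in .../FeatureServer/<layer id>.
LAYER_URL = "https://services.arcgis.com/EXAMPLE/arcgis/rest/services/PFAS/FeatureServer/0"


def build_query_url(layer_url, offset=0, page_size=1000):
    """Build an ArcGIS REST 'query' request for one page of features,
    returning all attribute fields and point geometry in lon/lat (WGS84)."""
    params = {
        "where": "1=1",            # no filter: match every feature
        "outFields": "*",          # all attribute columns
        "returnGeometry": "true",
        "outSR": "4326",           # ask the server for longitude/latitude
        "resultOffset": str(offset),
        "resultRecordCount": str(page_size),
        "f": "json",
    }
    return f"{layer_url}/query?{urllib.parse.urlencode(params)}"


def features_to_rows(payload):
    """Flatten an Esri JSON feature set into dicts with lon/lat columns."""
    rows = []
    for feature in payload.get("features", []):
        row = dict(feature.get("attributes", {}))
        geometry = feature.get("geometry") or {}
        row["lon"] = geometry.get("x")
        row["lat"] = geometry.get("y")
        rows.append(row)
    return rows


def fetch_all(layer_url, page_size=1000):
    """Page through the layer until the server stops reporting more results."""
    rows, offset = [], 0
    while True:
        with urllib.request.urlopen(build_query_url(layer_url, offset, page_size)) as resp:
            payload = json.load(resp)
        if "error" in payload:  # ArcGIS reports query errors inside the JSON body
            raise RuntimeError(payload["error"])
        page = features_to_rows(payload)
        rows.extend(page)
        if not page or not payload.get("exceededTransferLimit"):
            return rows
        offset += len(page)
```

Calling `fetch_all(LAYER_URL)` against a real layer URL would return a list of dicts that can be written out with `csv.DictWriter` and loaded into R for analysis.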

The graphics include detailed choropleth and point maps layered on top of each other, an interactive D3 bar chart, a D3 treemap and static graphics made in Flourish and customized in Adobe Illustrator.

Click here to view this project, which was my final project for a data visualization class at the University of Maryland.

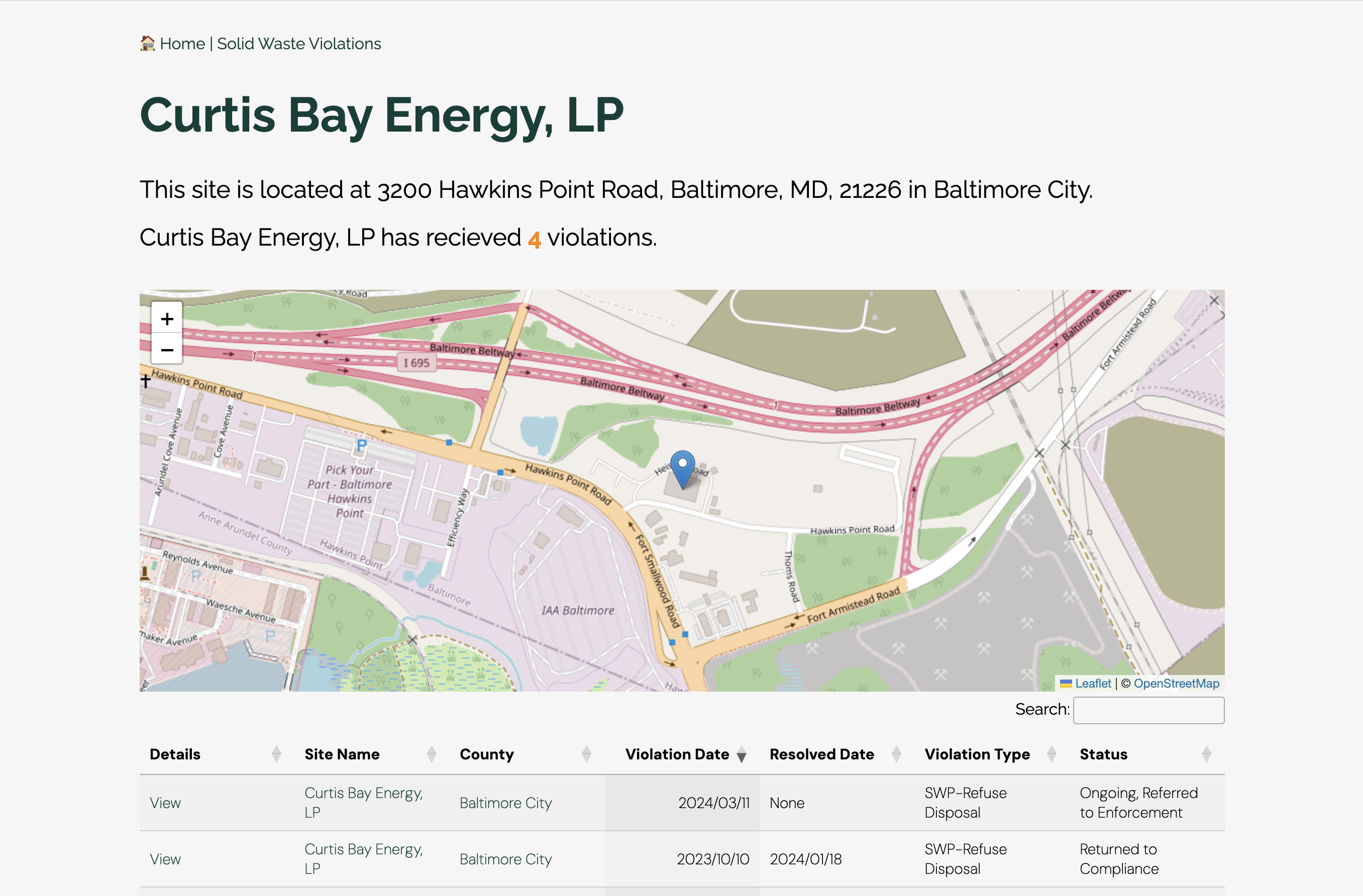

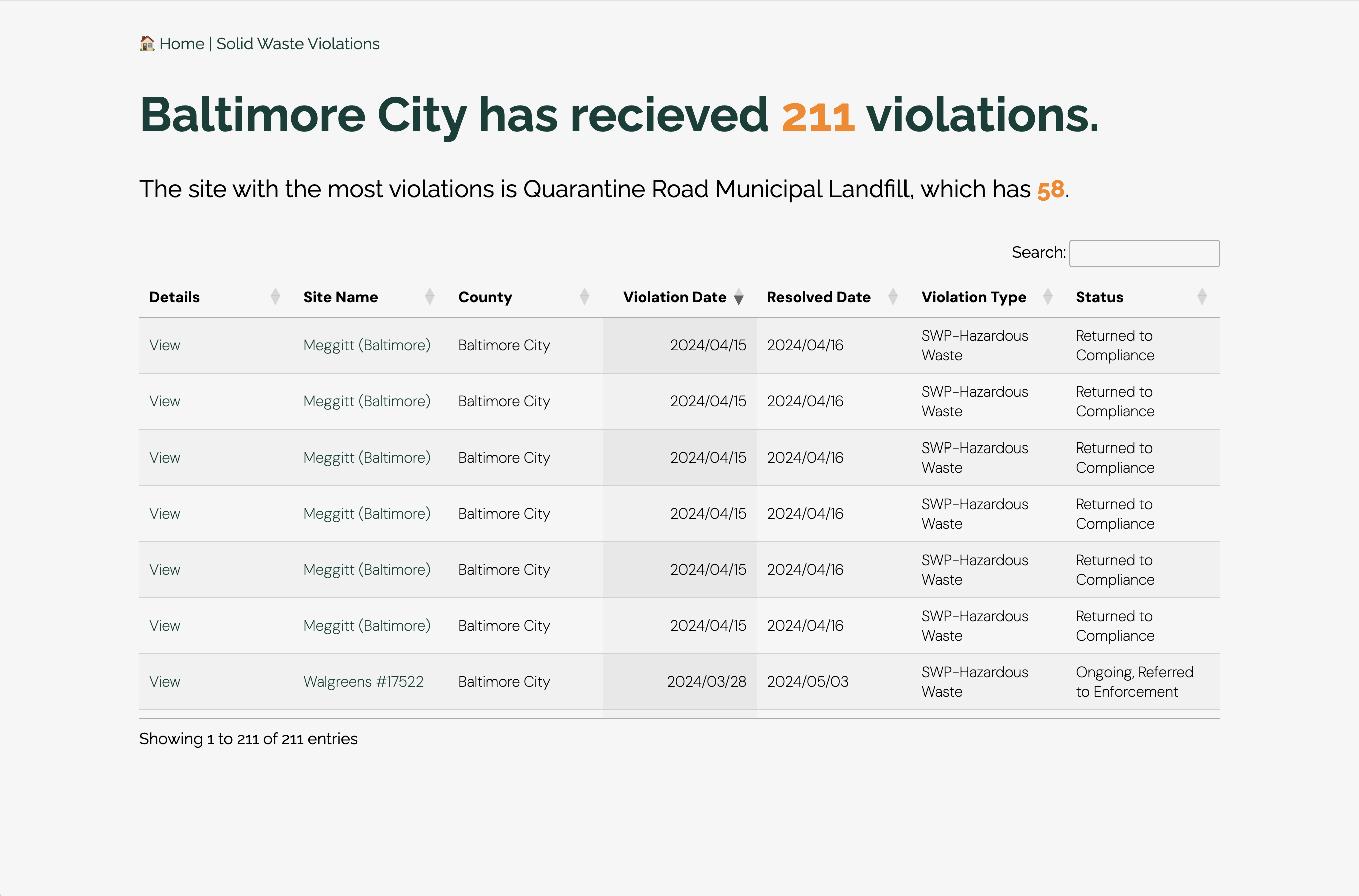

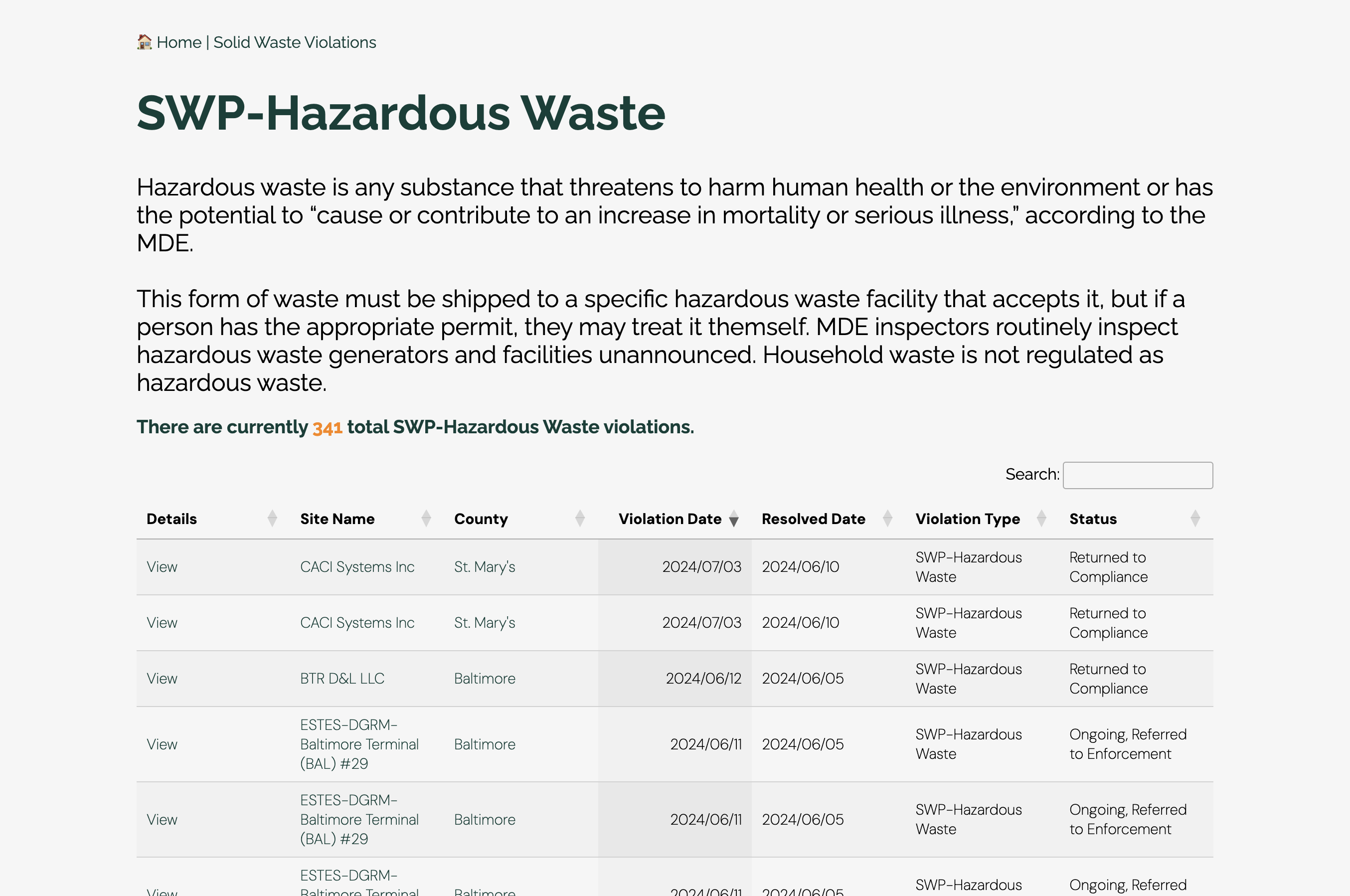

This was my final project for my spring 2024 news applications class, as well as the first news app I ever created!

I wrote a scraper, cleaned the data, geocoded the addresses with the geopy Python library, and designed and built an app with Flask. The app has a home page plus pages for each jurisdiction in Maryland, each type of violation, each site that received a violation and each individual violation. Every site page includes a map, made with the JavaScript library Leaflet, that shows the site's location.
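Geocoding is the step that turns each violation site's address into the coordinates a Leaflet map needs. Below is a minimal sketch of how that can look with geopy, under assumptions: the Nominatim geocoder, the `address` column name and the `user_agent` string are illustrative choices, not necessarily what the app uses. Because the same site can receive several violations, the sketch caches results so each distinct address is only looked up once, and it rate-limits requests to one per second.

```python
def make_nominatim_geocoder(user_agent="md-violations-app-example"):
    """Return a rate-limited geopy geocode function.

    geopy is imported lazily so geocode_rows() can be used (and tested)
    with any callable. The user_agent value here is a hypothetical example.
    """
    from geopy.geocoders import Nominatim
    from geopy.extra.rate_limiter import RateLimiter

    geolocator = Nominatim(user_agent=user_agent)
    # Nominatim's usage policy allows at most one request per second.
    return RateLimiter(geolocator.geocode, min_delay_seconds=1)


def geocode_rows(rows, geocode, address_key="address"):
    """Add lat/lon to each row, geocoding each distinct address only once.

    `geocode` takes an address string and returns an object with
    .latitude and .longitude (as geopy's Location does), or None.
    The 'address' column name is an assumption for illustration.
    """
    cache = {}
    for row in rows:
        address = row[address_key].strip()
        if address not in cache:
            location = geocode(address)
            cache[address] = (
                (location.latitude, location.longitude) if location else (None, None)
            )
        row["lat"], row["lon"] = cache[address]
    return rows
```

In practice this would be called as `geocode_rows(rows, make_nominatim_geocoder())`; rows left with `None` coordinates are the ones that need manual cleanup before mapping.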

This news app runs in a GitHub Codespace, which means that instead of running locally or on a dedicated server, it is deployed on GitHub's cloud-based infrastructure. Below are screenshots of the app as deployed.

Click here for instructions on running the app and clicking around in it yourself, along with its documentation and code. Click any of the images below for a closer look at the app.

I started this project for my spring 2024 news applications class and kept working on it after the semester ended.

This basic scraper uses the Python libraries BeautifulSoup and Newspaper3k to grab the text of each of the more than 750 alerts posted over more than a decade on the UMD Alerts website and store it in a CSV file. Keywords in each alert's title and text are used to categorize it as on- or off-campus and by type, including robbery, gas line issue, indecent exposure, weather, assault and test alerts.
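The keyword categorization can be sketched in plain Python. This is an illustrative sketch, not the scraper's actual code: the keyword lists below are hypothetical, alerts are assumed to be on-campus unless an off-campus phrase appears, and each alert gets the first matching type (checked in dictionary order) or `"other"`. Matching on word boundaries keeps a word like "protest" from being tagged as a test alert.

```python
import csv
import re

# Hypothetical keyword lists for illustration; the real scraper's keywords
# are not listed in the write-up. Types are checked in this order.
TYPE_KEYWORDS = {
    "test": ["test", "drill"],
    "robbery": ["robbery", "robbed"],
    "gas line": ["gas leak", "gas line"],
    "indecent exposure": ["indecent exposure"],
    "weather": ["weather", "snow", "tornado", "storm", "thunderstorm", "flood"],
    "assault": ["assault", "assaulted", "attacked"],
}
OFF_CAMPUS_KEYWORDS = ["off-campus", "off campus"]


def has_keyword(text, keywords):
    """True if any keyword appears in text as a whole word or phrase."""
    return any(re.search(rf"\b{re.escape(k)}\b", text) for k in keywords)


def categorize(title, body):
    """Return (location, alert_type) for one alert's title and text."""
    text = f"{title} {body}".lower()
    location = "off-campus" if has_keyword(text, OFF_CAMPUS_KEYWORDS) else "on-campus"
    alert_type = next(
        (t for t, kws in TYPE_KEYWORDS.items() if has_keyword(text, kws)), "other"
    )
    return location, alert_type


def write_alerts(alerts, path):
    """Write scraped alerts (dicts with 'title' and 'text') plus their categories to a CSV."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["title", "text", "location", "type"])
        writer.writeheader()
        for alert in alerts:
            location, alert_type = categorize(alert["title"], alert["text"])
            writer.writerow({**alert, "location": location, "type": alert_type})
```

A single-label scheme like this is easy to audit in a spreadsheet; the trade-off is that an alert mentioning two incident types only gets the first one in the list.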

GitHub Actions runs the scrape automatically every three minutes, so each alert is captured as soon as possible after it is posted to the website.

View the CSV, read my documentation and look at my code here.